adopy.tasks.dd

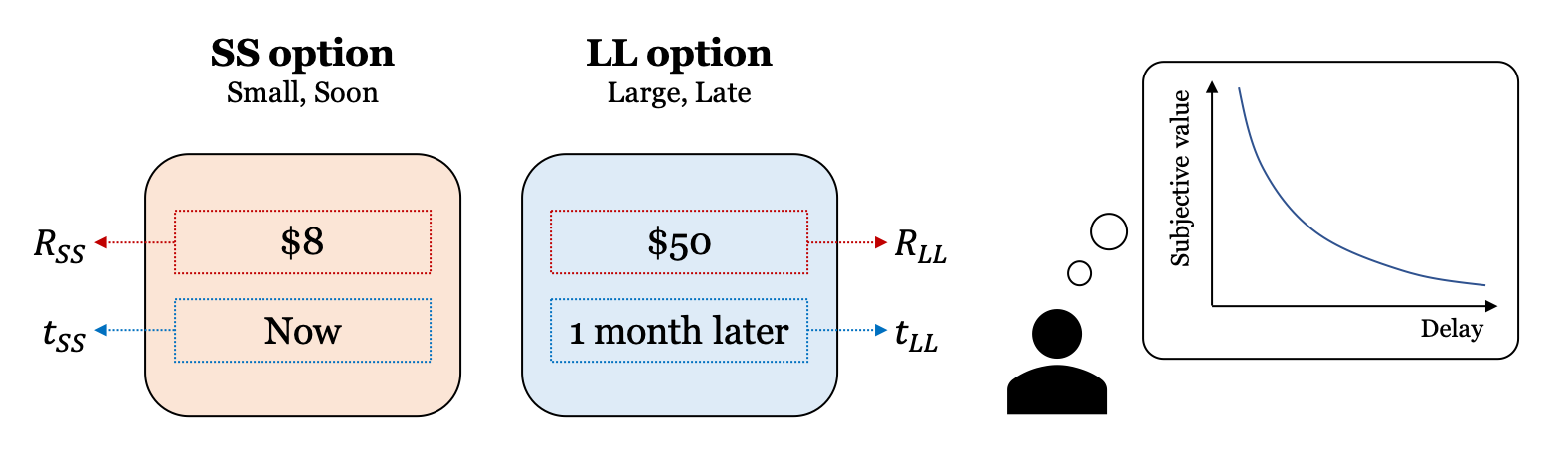

Delay discounting refers to the well-established finding that humans tend to discount the value of a future reward, with the discount increasing progressively as a function of the delay to receipt (Green & Myerson, 2004; Vincent, 2016). In a typical delay discounting (DD) task, the participant indicates their preference between two options: a smaller-sooner (SS) option (e.g., 8 dollars now) and a larger-longer (LL) option (e.g., 50 dollars in 1 month).

References

Green, L. and Myerson, J. (2004). A discounting framework for choice with delayed and probabilistic rewards. Psychological Bulletin, 130, 769–792.

Vincent, B. T. (2016). Hierarchical Bayesian estimation and hypothesis testing for delay discounting tasks. Behavior Research Methods, 48, 1608–1620.

Task

- class adopy.tasks.dd.TaskDD

Bases: Task

The Task class for the delay discounting task.

- Design variables

  - t_ss (\(t_{SS}\)): delay of the SS option
  - t_ll (\(t_{LL}\)): delay of the LL option
  - r_ss (\(R_{SS}\)): reward amount of the SS option
  - r_ll (\(R_{LL}\)): reward amount of the LL option

- Responses

  - choice: 0 (choosing the SS option) or 1 (choosing the LL option)

Examples

>>> from adopy.tasks.dd import TaskDD
>>> task = TaskDD()
>>> task.designs
['t_ss', 't_ll', 'r_ss', 'r_ll']
>>> task.responses
['choice']
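The four design variables can be illustrated with a small candidate grid. The sketch below is plain Python and independent of ADOpy; the grid values, the units (weeks, dollars), the helper name make_design_grid, and the dict representation of a design are all hypothetical. It keeps only combinations in which the SS option is both sooner and smaller than the LL option, matching the task setup described above.

```python
from itertools import product

# Hypothetical candidate values for each design variable (not ADOpy defaults).
T_SS = [0]                                          # delay of the SS option (weeks)
T_LL = [1, 2, 4, 8, 12, 26, 52]                     # delay of the LL option (weeks)
R_SS = [12.5, 25.0, 37.5, 50.0, 62.5, 75.0, 87.5]   # reward of the SS option (dollars)
R_LL = [100.0]                                      # reward of the LL option (dollars)


def make_design_grid(t_ss_vals, t_ll_vals, r_ss_vals, r_ll_vals):
    """Return every design whose SS option is sooner and smaller than its LL option.

    Each design is a dict keyed by the TaskDD design names.
    """
    grid = []
    for t_ss, t_ll, r_ss, r_ll in product(t_ss_vals, t_ll_vals, r_ss_vals, r_ll_vals):
        if t_ss < t_ll and r_ss < r_ll:
            grid.append({"t_ss": t_ss, "t_ll": t_ll, "r_ss": r_ss, "r_ll": r_ll})
    return grid


designs = make_design_grid(T_SS, T_LL, R_SS, R_LL)
print(len(designs))  # 49
print(designs[0])    # {'t_ss': 0, 't_ll': 1, 'r_ss': 12.5, 'r_ll': 100.0}
```

Filtering with an explicit constraint, rather than hand-listing pairs, keeps the grid valid if any of the value lists is later extended.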

Model

- class adopy.tasks.dd.ModelExp

Bases: Model

The exponential model for the delay discounting task (Samuelson, 1937).

\[
\begin{aligned}
D(t) &= e^{-rt} \\
V_{LL} &= R_{LL} \cdot D(t_{LL}) \\
V_{SS} &= R_{SS} \cdot D(t_{SS}) \\
P(LL \text{ over } SS) &= \frac{1}{1 + \exp[-\tau (V_{LL} - V_{SS})]}
\end{aligned}
\]

- Model parameters

  - r (\(r\)): discounting parameter (\(r > 0\))
  - tau (\(\tau\)): inverse temperature (\(\tau > 0\))
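The exponential model can be written out as a short, library-independent sketch in plain Python. It assumes scalar inputs and the TaskDD response coding (choice = 0 for SS, 1 for LL); the names log_sigmoid, discount_exp, and log_lik_exp are hypothetical and not part of ADOpy, whose own implementation may be vectorized and organized differently. The function returns the log likelihood of a single choice, the quantity compute() is documented to return.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(1 / (1 + exp(-x)))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def discount_exp(t: float, r: float) -> float:
    """Exponential discount factor D(t) = exp(-r * t)."""
    return math.exp(-r * t)


def log_lik_exp(choice, t_ss, t_ll, r_ss, r_ll, r, tau):
    """Log likelihood of one choice (0 = SS, 1 = LL) under the exponential model."""
    v_ll = r_ll * discount_exp(t_ll, r)
    v_ss = r_ss * discount_exp(t_ss, r)
    x = tau * (v_ll - v_ss)
    # P(LL over SS) = sigmoid(x), and P(SS) = 1 - sigmoid(x) = sigmoid(-x).
    return log_sigmoid(x) if choice == 1 else log_sigmoid(-x)


# Example: 8 dollars now vs. 50 dollars in 30 days (hypothetical parameter values).
print(math.exp(log_lik_exp(1, 0, 30, 8, 50, r=0.05, tau=0.5)))
```

Evaluating the log-sigmoid directly, instead of taking the log of the logistic probability, avoids overflow in math.exp and a log of zero when tau * (V_LL - V_SS) is large in magnitude.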

References

Samuelson, P. A. (1937). A note on measurement of utility. The Review of Economic Studies, 4 (2), 155–161.

Examples

>>> from adopy.tasks.dd import ModelExp
>>> model = ModelExp()
>>> model.task
Task('DDT', designs=['t_ss', 't_ll', 'r_ss', 'r_ll'], responses=[0, 1])
>>> model.params
['r', 'tau']

- compute(choice, t_ss, t_ll, r_ss, r_ll, r, tau)

Compute the log likelihood of obtaining the responses given the designs and model parameters. The function provides the same result as the func argument given at initialization. If no likelihood function was given for the model, it returns the log probability of random noise.

Warning

Since version 0.4.0, the compute() function should compute the log likelihood instead of the probability of a binary response variable, and it should include the response variables as arguments. These changes might break existing code written for previous versions of ADOpy.

Changed in version 0.4.0: Provides the log likelihood instead of the probability of a binary response.
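The 0.4.0 change (a log likelihood instead of the probability of a binary response, with the response passed as an argument) can be illustrated by wrapping an older-style probability function. This is a hypothetical migration sketch, not ADOpy code: prob_ll_legacy and as_log_likelihood are illustrative names, and the wrapper assumes the legacy function returned P(choice = 1), i.e. the probability of choosing the LL option.

```python
import math
from typing import Callable


def prob_ll_legacy(t_ss, t_ll, r_ss, r_ll, r, tau):
    """Hypothetical pre-0.4.0-style function returning P(choice = 1) under the exponential model."""
    v_ll = r_ll * math.exp(-r * t_ll)
    v_ss = r_ss * math.exp(-r * t_ss)
    return 1.0 / (1.0 + math.exp(-tau * (v_ll - v_ss)))


def as_log_likelihood(prob_fn: Callable[..., float]) -> Callable[..., float]:
    """Turn a function returning P(choice = 1) into one that takes the response
    first and returns the Bernoulli log likelihood of that response."""
    def log_lik(choice, *args, **kwargs):
        p = prob_fn(*args, **kwargs)
        # log P(choice) = choice * log(p) + (1 - choice) * log(1 - p);
        # p must lie strictly between 0 and 1, otherwise math raises ValueError.
        return math.log(p) if choice == 1 else math.log1p(-p)
    return log_lik


log_lik_exp = as_log_likelihood(prob_ll_legacy)
print(log_lik_exp(0, 0, 30, 8, 50, r=0.05, tau=0.5))
```

Putting the response first mirrors the argument order of the compute() signatures listed on this page.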

- class adopy.tasks.dd.ModelHyp

Bases: Model

The hyperbolic model for the delay discounting task (Mazur, 1987).

\[
\begin{aligned}
D(t) &= \frac{1}{1 + kt} \\
V_{LL} &= R_{LL} \cdot D(t_{LL}) \\
V_{SS} &= R_{SS} \cdot D(t_{SS}) \\
P(LL \text{ over } SS) &= \frac{1}{1 + \exp[-\tau (V_{LL} - V_{SS})]}
\end{aligned}
\]

- Model parameters

  - k (\(k\)): discounting parameter (\(k > 0\))
  - tau (\(\tau\)): inverse temperature (\(\tau > 0\))
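A plain-Python sketch of the hyperbolic model, under the same assumptions as any scalar illustration here: one choice at a time, choice = 0 for SS and 1 for LL. The names discount_hyp and log_lik_hyp are hypothetical and not ADOpy's API. The usage line picks values at which the two options have equal subjective value, so the choice probability is exactly one half.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(1 / (1 + exp(-x)))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def discount_hyp(t: float, k: float) -> float:
    """Hyperbolic discount factor D(t) = 1 / (1 + k * t)."""
    return 1.0 / (1.0 + k * t)


def log_lik_hyp(choice, t_ss, t_ll, r_ss, r_ll, k, tau):
    """Log likelihood of one choice (0 = SS, 1 = LL) under the hyperbolic model."""
    x = tau * (r_ll * discount_hyp(t_ll, k) - r_ss * discount_hyp(t_ss, k))
    return log_sigmoid(x) if choice == 1 else log_sigmoid(-x)


# With k = 0.1, 100 after 10 time units is worth 100 / (1 + 1) = 50,
# exactly as much as 50 now, so P(LL over SS) = 0.5.
print(round(math.exp(log_lik_hyp(1, 0, 10, 50, 100, k=0.1, tau=1.0)), 6))  # 0.5
```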

References

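Mazur, J. E. (1987). An adjusting procedure for studying delayed reinforcement. In M. L. Commons, J. E. Mazur, J. A. Nevin, and H. Rachlin (Eds.), Quantitative Analyses of Behavior: Vol. 5 (pp. 55–73). Hillsdale, NJ: Erlbaum.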

Examples

>>> from adopy.tasks.dd import ModelHyp
>>> model = ModelHyp()
>>> model.task
Task('DDT', designs=['t_ss', 't_ll', 'r_ss', 'r_ll'], responses=[0, 1])
>>> model.params
['k', 'tau']

- compute(choice, t_ss, t_ll, r_ss, r_ll, k, tau)

Compute the log likelihood of obtaining the responses given the designs and model parameters. The function provides the same result as the func argument given at initialization. If no likelihood function was given for the model, it returns the log probability of random noise.

Warning

Since version 0.4.0, the compute() function should compute the log likelihood instead of the probability of a binary response variable, and it should include the response variables as arguments. These changes might break existing code written for previous versions of ADOpy.

Changed in version 0.4.0: Provides the log likelihood instead of the probability of a binary response.

- class adopy.tasks.dd.ModelHPB

Bases: Model

The hyperboloid model for the delay discounting task (Green & Myerson, 2004).

\[
\begin{aligned}
D(t) &= \frac{1}{(1 + kt)^s} \\
V_{LL} &= R_{LL} \cdot D(t_{LL}) \\
V_{SS} &= R_{SS} \cdot D(t_{SS}) \\
P(LL \text{ over } SS) &= \frac{1}{1 + \exp[-\tau (V_{LL} - V_{SS})]}
\end{aligned}
\]

- Model parameters

  - k (\(k\)): discounting parameter (\(k > 0\))
  - s (\(s\)): scale parameter (\(s > 0\))
  - tau (\(\tau\)): inverse temperature (\(\tau > 0\))
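A plain-Python sketch of the hyperboloid model, assuming scalar inputs and choice = 0 for SS, 1 for LL; discount_hpb and log_lik_hpb are hypothetical names, not ADOpy's API. The loop at the end shows the role of the scale parameter: with s = 1 the discount factor equals the hyperbolic one, s < 1 makes discounting shallower, and s > 1 makes it steeper.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(1 / (1 + exp(-x)))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def discount_hpb(t: float, k: float, s: float) -> float:
    """Hyperboloid discount factor D(t) = 1 / (1 + k * t) ** s."""
    return 1.0 / (1.0 + k * t) ** s


def log_lik_hpb(choice, t_ss, t_ll, r_ss, r_ll, k, s, tau):
    """Log likelihood of one choice (0 = SS, 1 = LL) under the hyperboloid model."""
    x = tau * (r_ll * discount_hpb(t_ll, k, s) - r_ss * discount_hpb(t_ss, k, s))
    return log_sigmoid(x) if choice == 1 else log_sigmoid(-x)


# Discount factor at t = 10 with k = 0.1 for several values of s (hypothetical values).
for s in (0.5, 1.0, 2.0):
    print(s, round(discount_hpb(10, 0.1, s), 4))
```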

References

Green, L. and Myerson, J. (2004). A discounting framework for choice with delayed and probabilistic rewards. Psychological Bulletin, 130, 769–792.

Examples

>>> from adopy.tasks.dd import ModelHPB
>>> model = ModelHPB()
>>> model.task
Task('DDT', designs=['t_ss', 't_ll', 'r_ss', 'r_ll'], responses=[0, 1])
>>> model.params
['k', 's', 'tau']

- compute(choice, t_ss, t_ll, r_ss, r_ll, k, s, tau)

Compute the log likelihood of obtaining the responses given the designs and model parameters. The function provides the same result as the func argument given at initialization. If no likelihood function was given for the model, it returns the log probability of random noise.

Warning

Since version 0.4.0, the compute() function should compute the log likelihood instead of the probability of a binary response variable, and it should include the response variables as arguments. These changes might break existing code written for previous versions of ADOpy.

Changed in version 0.4.0: Provides the log likelihood instead of the probability of a binary response.

- class adopy.tasks.dd.ModelCOS

Bases: Model

The constant sensitivity model for the delay discounting task (Ebert & Prelec, 2007).

\[
\begin{aligned}
D(t) &= \exp[-(rt)^s] \\
V_{LL} &= R_{LL} \cdot D(t_{LL}) \\
V_{SS} &= R_{SS} \cdot D(t_{SS}) \\
P(LL \text{ over } SS) &= \frac{1}{1 + \exp[-\tau (V_{LL} - V_{SS})]}
\end{aligned}
\]

- Model parameters

  - r (\(r\)): discounting parameter (\(r > 0\))
  - s (\(s\)): scale parameter (\(s > 0\))
  - tau (\(\tau\)): inverse temperature (\(\tau > 0\))
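A plain-Python sketch of the constant sensitivity model, assuming scalar inputs and choice = 0 for SS, 1 for LL; discount_cos and log_lik_cos are hypothetical names, not ADOpy's API. Setting s = 1 recovers the exponential discount function exp(-rt), which the printed comparison at the end makes visible.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(1 / (1 + exp(-x)))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def discount_cos(t: float, r: float, s: float) -> float:
    """Constant sensitivity discount factor D(t) = exp(-(r * t) ** s)."""
    return math.exp(-((r * t) ** s))


def log_lik_cos(choice, t_ss, t_ll, r_ss, r_ll, r, s, tau):
    """Log likelihood of one choice (0 = SS, 1 = LL) under the constant sensitivity model."""
    x = tau * (r_ll * discount_cos(t_ll, r, s) - r_ss * discount_cos(t_ss, r, s))
    return log_sigmoid(x) if choice == 1 else log_sigmoid(-x)


# Compare s = 0.5 with the exponential special case s = 1 (hypothetical r = 0.05).
for t in (1, 10, 100):
    print(t, round(discount_cos(t, 0.05, 0.5), 4), round(discount_cos(t, 0.05, 1.0), 4))
```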

References

Ebert, J. E. and Prelec, D. (2007). The fragility of time: Time-insensitivity and valuation of the near and far future. Management Science, 53 (9), 1423–1438.

Examples

>>> from adopy.tasks.dd import ModelCOS
>>> model = ModelCOS()
>>> model.task
Task('DDT', designs=['t_ss', 't_ll', 'r_ss', 'r_ll'], responses=[0, 1])
>>> model.params
['r', 's', 'tau']

- compute(choice, t_ss, t_ll, r_ss, r_ll, r, s, tau)

Compute the log likelihood of obtaining the responses given the designs and model parameters. The function provides the same result as the func argument given at initialization. If no likelihood function was given for the model, it returns the log probability of random noise.

Warning

Since version 0.4.0, the compute() function should compute the log likelihood instead of the probability of a binary response variable, and it should include the response variables as arguments. These changes might break existing code written for previous versions of ADOpy.

Changed in version 0.4.0: Provides the log likelihood instead of the probability of a binary response.

- class adopy.tasks.dd.ModelQH

Bases: Model

The quasi-hyperbolic model (or Beta-Delta model) for the delay discounting task (Laibson, 1997).

\[
\begin{aligned}
D(t) &= \begin{cases} 1 & \text{if } t = 0 \\ \beta \delta^t & \text{if } t > 0 \end{cases} \\
V_{LL} &= R_{LL} \cdot D(t_{LL}) \\
V_{SS} &= R_{SS} \cdot D(t_{SS}) \\
P(LL \text{ over } SS) &= \frac{1}{1 + \exp[-\tau (V_{LL} - V_{SS})]}
\end{aligned}
\]

- Model parameters

  - beta (\(\beta\)): present-bias parameter (\(0 < \beta < 1\))
  - delta (\(\delta\)): per-period discount factor (\(0 < \delta < 1\))
  - tau (\(\tau\)): inverse temperature (\(\tau > 0\))
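A plain-Python sketch of the quasi-hyperbolic model, assuming scalar inputs and choice = 0 for SS, 1 for LL; discount_qh and log_lik_qh are hypothetical names, not ADOpy's API. Because beta multiplies every positive delay but not t = 0, the model can prefer the SS option when it is immediate and switch to the LL option when both options are pushed into the future. The usage lines show this preference reversal with hypothetical parameter values.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(1 / (1 + exp(-x)))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def discount_qh(t: float, beta: float, delta: float) -> float:
    """Quasi-hyperbolic discount factor: 1 at t = 0, beta * delta ** t for t > 0."""
    if t == 0:
        return 1.0
    return beta * delta ** t


def log_lik_qh(choice, t_ss, t_ll, r_ss, r_ll, beta, delta, tau):
    """Log likelihood of one choice (0 = SS, 1 = LL) under the quasi-hyperbolic model."""
    x = tau * (r_ll * discount_qh(t_ll, beta, delta) - r_ss * discount_qh(t_ss, beta, delta))
    return log_sigmoid(x) if choice == 1 else log_sigmoid(-x)


# Same amounts (50 vs. 60) one period apart, first starting now, then shifted by 10 periods.
params = dict(beta=0.7, delta=0.9, tau=1.0)
print(math.exp(log_lik_qh(1, 0, 1, 50, 60, **params)))    # P(LL) below 0.5
print(math.exp(log_lik_qh(1, 10, 11, 50, 60, **params)))  # P(LL) above 0.5
```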

References

Laibson, D. (1997). Golden eggs and hyperbolic discounting. The Quarterly Journal of Economics, 112 (2), 443–477.

Examples

>>> from adopy.tasks.dd import ModelQH
>>> model = ModelQH()
>>> model.task
Task('DDT', designs=['t_ss', 't_ll', 'r_ss', 'r_ll'], responses=[0, 1])
>>> model.params
['beta', 'delta', 'tau']

- compute(choice, t_ss, t_ll, r_ss, r_ll, beta, delta, tau)

Compute the log likelihood of obtaining the responses given the designs and model parameters. The function provides the same result as the func argument given at initialization. If no likelihood function was given for the model, it returns the log probability of random noise.

Warning

Since version 0.4.0, the compute() function should compute the log likelihood instead of the probability of a binary response variable, and it should include the response variables as arguments. These changes might break existing code written for previous versions of ADOpy.

Changed in version 0.4.0: Provides the log likelihood instead of the probability of a binary response.

- class adopy.tasks.dd.ModelDE

Bases: Model

The double exponential model for the delay discounting task (McClure et al., 2007).

\[
\begin{aligned}
D(t) &= \omega e^{-rt} + (1 - \omega) e^{-st} \\
V_{LL} &= R_{LL} \cdot D(t_{LL}) \\
V_{SS} &= R_{SS} \cdot D(t_{SS}) \\
P(LL \text{ over } SS) &= \frac{1}{1 + \exp[-\tau (V_{LL} - V_{SS})]}
\end{aligned}
\]

- Model parameters

  - omega (\(\omega\)): weight parameter (\(0 < \omega < 1\))
  - r (\(r\)): discounting rate (\(r > 0\))
  - s (\(s\)): discounting rate (\(s > 0\))
  - tau (\(\tau\)): inverse temperature (\(\tau > 0\))
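A plain-Python sketch of the double exponential model, assuming scalar inputs and choice = 0 for SS, 1 for LL; discount_de and log_lik_de are hypothetical names, not ADOpy's API. The discount factor is a weighted mixture of two exponential curves, so for r > s it always lies between exp(-rt) and exp(-st), and omega = 1 reduces it to a single exponential with rate r.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(1 / (1 + exp(-x)))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def discount_de(t: float, omega: float, r: float, s: float) -> float:
    """Double exponential discount factor D(t) = omega * exp(-r t) + (1 - omega) * exp(-s t)."""
    return omega * math.exp(-r * t) + (1.0 - omega) * math.exp(-s * t)


def log_lik_de(choice, t_ss, t_ll, r_ss, r_ll, omega, r, s, tau):
    """Log likelihood of one choice (0 = SS, 1 = LL) under the double exponential model."""
    x = tau * (r_ll * discount_de(t_ll, omega, r, s) - r_ss * discount_de(t_ss, omega, r, s))
    return log_sigmoid(x) if choice == 1 else log_sigmoid(-x)


# A fast (r = 0.5) and a slow (s = 0.01) component mixed 30/70 (hypothetical values).
for t in (0, 1, 10, 100):
    print(t, round(discount_de(t, 0.3, 0.5, 0.01), 4))
```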

References

McClure, S. M., Ericson, K. M., Laibson, D. I., Loewenstein, G., and Cohen, J. D. (2007). Time discounting for primary rewards. Journal of Neuroscience, 27 (21), 5796–5804.

Examples

>>> from adopy.tasks.dd import ModelDE
>>> model = ModelDE()
>>> model.task
Task('DDT', designs=['t_ss', 't_ll', 'r_ss', 'r_ll'], responses=[0, 1])
>>> model.params
['omega', 'r', 's', 'tau']

- compute(choice, t_ss, t_ll, r_ss, r_ll, omega, r, s, tau)

Compute the log likelihood of obtaining the responses given the designs and model parameters. The function provides the same result as the func argument given at initialization. If no likelihood function was given for the model, it returns the log probability of random noise.

Warning

Since version 0.4.0, the compute() function should compute the log likelihood instead of the probability of a binary response variable, and it should include the response variables as arguments. These changes might break existing code written for previous versions of ADOpy.

Changed in version 0.4.0: Provides the log likelihood instead of the probability of a binary response.